Commits on Source (27)

All 27 commits were authored by Yifan Zhao.

Showing 20 changed files with 776 additions and 387 deletions:

- .readthedocs.yaml: 12 additions, 0 deletions

- LICENSE: 28 additions, 15 deletions

- README.md: 0 additions, 56 deletions

- README.rst: 15 additions, 0 deletions

- doc/README.md: 1 addition, 1 deletion

- doc/_static/result_no_model.png: 0 additions, 0 deletions

- doc/_static/result_with_model.png: 0 additions, 0 deletions

- doc/conf.py: 10 additions, 57 deletions

- doc/getting_started.rst: 60 additions, 21 deletions

- doc/reference/approx-app.rst: 14 additions, 0 deletions

- doc/reference/index.rst: 13 additions, 9 deletions

- doc/reference/modeled-app.rst: 41 additions, 0 deletions

- doc/reference/pytorch-app.rst: 17 additions, 0 deletions

- doc/requirements.txt: 3 additions, 0 deletions

- doc/tuning_result.png: 0 additions, 0 deletions

- examples/tune_vgg16_cifar10.py: 3 additions, 2 deletions

- predtuner/__init__.py: 2 additions, 2 deletions

- predtuner/approxapp.py: 288 additions, 97 deletions

- predtuner/approxes/approxes.py: 15 additions, 0 deletions

- predtuner/modeledapp.py: 254 additions, 127 deletions

File mode changes:

- .readthedocs.yaml: new file (mode 0 → 100644)

- README.md: deleted (mode 100644 → 0)

- README.rst: new file (mode 0 → 100644)

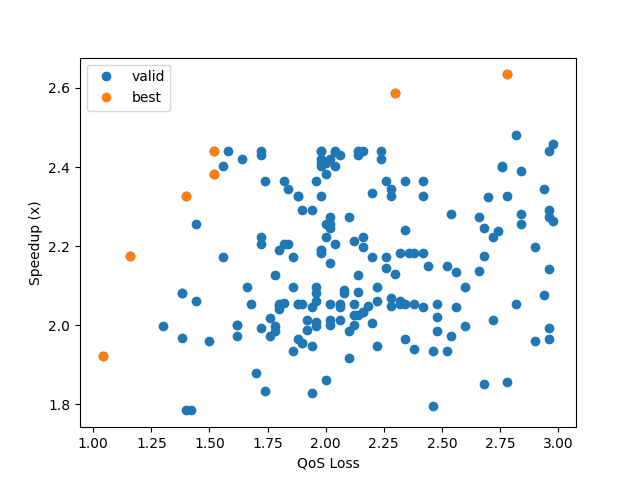

- doc/_static/result_no_model.png: new file (mode 0 → 100644), 216 KiB

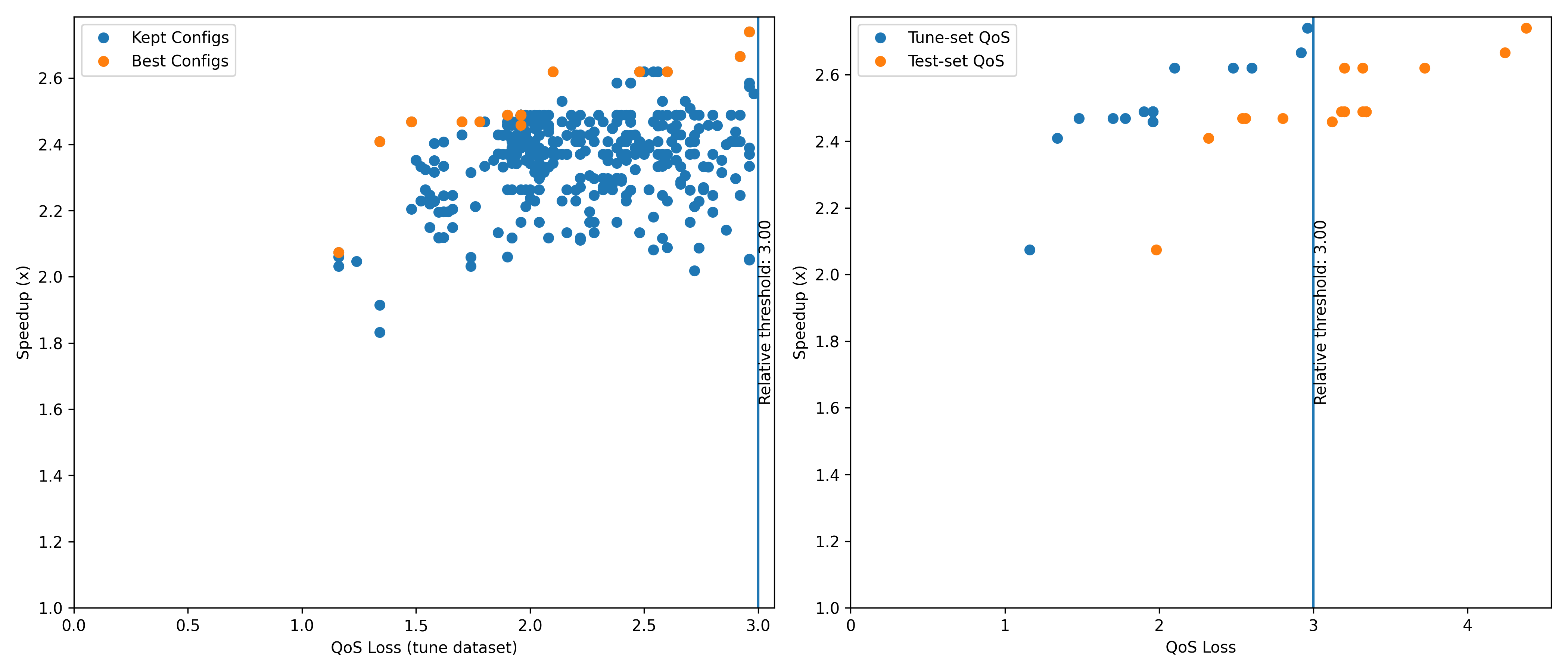

- doc/_static/result_with_model.png: new file (mode 0 → 100644), 253 KiB

- doc/reference/approx-app.rst: new file (mode 0 → 100644)

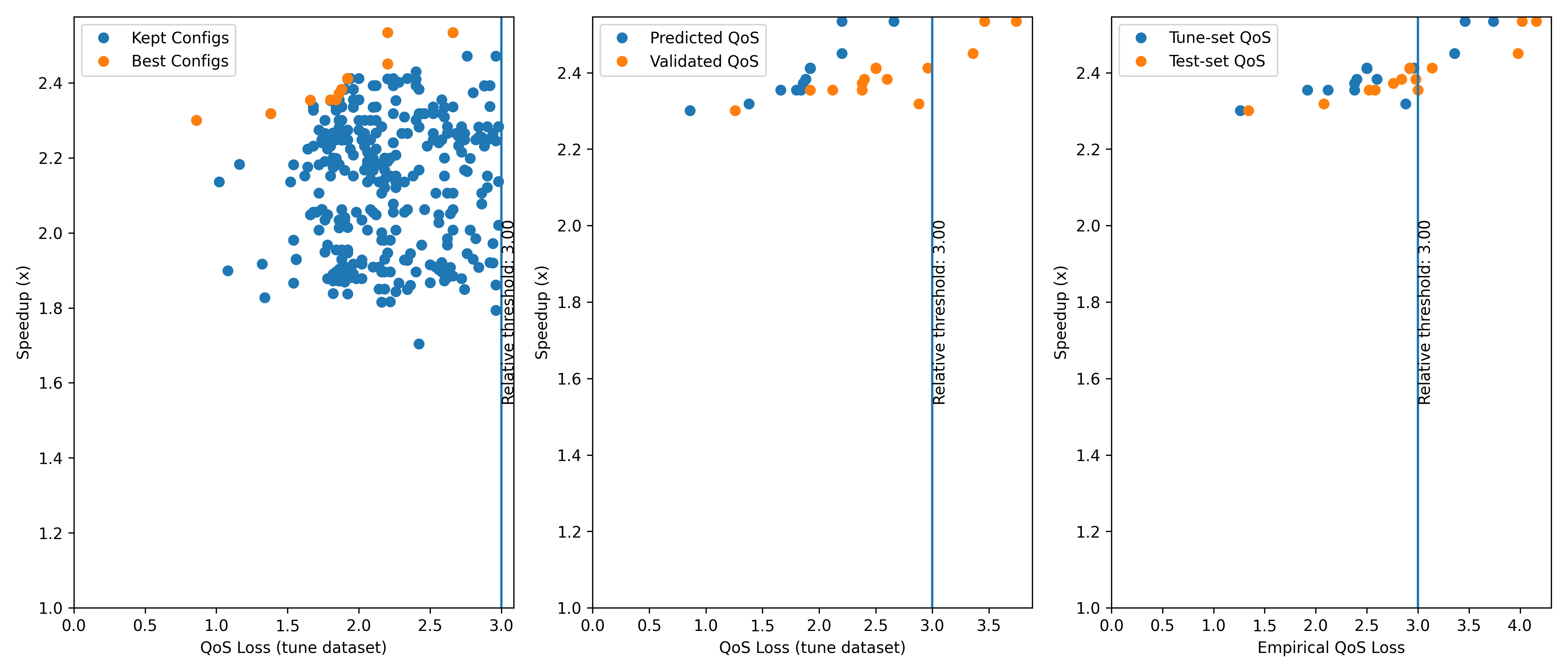

- doc/reference/modeled-app.rst: new file (mode 0 → 100644)

- doc/reference/pytorch-app.rst: new file (mode 0 → 100644)

- doc/requirements.txt: new file (mode 0 → 100644)

- doc/tuning_result.png: deleted (mode 100644 → 0), 26.3 KiB