- Feb 16, 2018

Shintaro Murakami authored

## What changes were proposed in this pull request?

The Murmur3 hash generates a different value from the original and other implementations (such as the Scala standard library and Guava) when the length of a byte array is not a multiple of 4.

## How was this patch tested?

Added a unit test.

**Note: When we merge this PR, please give all the credits to Shintaro Murakami.**

Author: Shintaro Murakami <mrkm4ntrgmail.com>
Author: gatorsmile <gatorsmile@gmail.com>
Author: Shintaro Murakami <mrkm4ntr@gmail.com>

Closes #20630 from gatorsmile/pr-20568.
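For readers unfamiliar with the tail case, here is a minimal sketch of the reference Murmur3 x86_32 algorithm in plain Scala. It is not Spark's code; the object name `Murmur3TailSketch` is hypothetical. The point it illustrates is that the 1 to 3 bytes left over after the last full 4-byte block are packed into a single word and mixed once.

```scala
object Murmur3TailSketch {
  private val C1 = 0xcc9e2d51
  private val C2 = 0x1b873593

  // Scramble one 32-bit block before it is folded into the hash state.
  private def mixK1(k: Int): Int =
    Integer.rotateLeft(k * C1, 15) * C2

  // Fold a scrambled block into the running hash state.
  private def mixH1(h: Int, k1: Int): Int =
    Integer.rotateLeft(h ^ k1, 13) * 5 + 0xe6546b64

  // Final avalanche step; the input length is mixed in here.
  private def fmix(h0: Int, length: Int): Int = {
    var h = h0 ^ length
    h ^= h >>> 16
    h *= 0x85ebca6b
    h ^= h >>> 13
    h *= 0xc2b2ae35
    h ^= h >>> 16
    h
  }

  def hash(data: Array[Byte], seed: Int): Int = {
    val aligned = data.length - data.length % 4
    var h1 = seed
    var i = 0
    // Body: full 4-byte blocks, read little-endian.
    while (i < aligned) {
      val k = (data(i) & 0xff) | ((data(i + 1) & 0xff) << 8) |
        ((data(i + 2) & 0xff) << 16) | ((data(i + 3) & 0xff) << 24)
      h1 = mixH1(h1, mixK1(k))
      i += 4
    }
    // Tail: pack the remaining 1..3 bytes into ONE word and mix it once,
    // without the rotate/multiply step used for full blocks.
    var k1 = 0
    var shift = 0
    while (i < data.length) {
      k1 ^= (data(i) & 0xff) << shift
      shift += 8
      i += 1
    }
    if (shift > 0) h1 ^= mixK1(k1)
    fmix(h1, data.length)
  }
}
```

Comparing `Murmur3TailSketch.hash(bytes, seed)` with `scala.util.hashing.MurmurHash3.bytesHash(bytes, seed)` for lengths that are not a multiple of 4 is one way to check agreement with the Scala standard library.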

- Feb 15, 2018

Liang-Chi Hsieh authored

## What changes were proposed in this pull request?

#### Problem:

Since 2.3, `Bucketizer` supports multiple input/output columns. We check whether mutually exclusive params are set during transformation. E.g., if `inputCols` and `outputCol` are both set, an error is thrown.

However, when we write a `Bucketizer`, it looks like the default params and user-supplied params are merged during writing. All saved params are loaded back and set on the created model instance. So the default `outputCol` param in the `HasOutputCol` trait is set in `paramMap` and becomes a user-supplied param. That makes the check of exclusive params fail.

#### Fix:

This changes the saving logic of `Bucketizer` to handle this case. This is a quick fix to make it in time for 2.3. We should consider modifying the persistence mechanism later.

Please see the discussion in the JIRA.

Note: The multi-column `QuantileDiscretizer` also has the same issue.

## How was this patch tested?

Modified tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20594 from viirya/SPARK-23377-2.
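The failure mode described above can be reproduced with a toy model of defaults versus user-supplied params. This is only a sketch: `Params`, `saveMerged`, `saveUserSetOnly` and `load` are hypothetical names, not Spark's persistence API, and saving only user-set params is shown as one possible remedy rather than what the patch does.

```scala
object ParamPersistenceSketch {
  // Hypothetical, simplified params holder: defaults are kept apart from user-set values.
  final case class Params(defaults: Map[String, String], userSet: Map[String, String]) {
    def isSet(name: String): Boolean = userSet.contains(name)
  }

  // The exclusivity check only looks at user-set params.
  def exclusiveParamsOk(p: Params): Boolean =
    !(p.isSet("inputCols") && p.isSet("outputCol"))

  // Writing defaults and user-set params as one merged map loses the distinction.
  def saveMerged(p: Params): Map[String, String] = p.defaults ++ p.userSet

  // Writing only what the user set keeps the distinction.
  def saveUserSetOnly(p: Params): Map[String, String] = p.userSet

  // Loading treats every saved entry as user-supplied.
  def load(saved: Map[String, String], defaults: Map[String, String]): Params =
    Params(defaults, saved)
}
```

With a default `outputCol` and a user-set `inputCols`, the instance passes the check before saving, fails it after a merged save and load, and passes again when only user-set params are written.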

- Feb 13, 2018

Marco Gaido authored

## What changes were proposed in this pull request?

The PR provides an implementation of `ClusteringEvaluator` using the cosine distance measure. This makes it possible to evaluate clustering results created using the cosine distance, introduced in SPARK-22119.

In the corresponding JIRA, there is a design document for the algorithm implemented here.

## How was this patch tested?

Added a UT which compares the result to the one provided by Python sklearn.

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20396 from mgaido91/SPARK-23217.

xubo245 authored

## What changes were proposed in this pull request?

Add some test cases for the image feature.

## How was this patch tested?

Added some test cases in `ImageSchemaSuite`.

Author: xubo245 <601450868@qq.com>

Closes #20583 from xubo245/CARBONDATA23392_AddTestForImage.

Arseniy Tashoyan authored

## What changes were proposed in this pull request?

Cache the RDD of items in ml.FPGrowth before passing it to mllib.FPGrowth. Cache only when the user did not cache the input dataset of transactions. This fixes the warning about uncached data emerging from mllib.FPGrowth.

## How was this patch tested?

Manually:
1. Run ml.FPGrowthExample - warning is there
2. Apply the fix
3. Run ml.FPGrowthExample again - no warning anymore

Author: Arseniy Tashoyan <tashoyan@gmail.com>

Closes #20578 from tashoyan/SPARK-23318.

- Feb 11, 2018

Marco Gaido authored

## What changes were proposed in this pull request?

In #19340, some comments suggested that spherical KMeans should be used when the cosine distance measure is specified, as Matlab does, instead of the implementation based on the behavior of other tools/libraries like Rapidminer, nltk and ELKI, i.e. computing the centroids as the mean of all the points in the clusters. This PR introduces the approach used in spherical KMeans. This behavior has the nice property of minimizing the within-cluster cosine distance.

## How was this patch tested?

Existing/improved UTs.

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20518 from mgaido91/SPARK-22119_followup.
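As a rough illustration of the spherical KMeans centroid update, the sketch below projects each point onto the unit sphere, sums the unit vectors, and projects the result back. It is a plain-Scala sketch under that textbook definition, not Spark's implementation; `SphericalCentroidSketch` and its helpers are hypothetical names.

```scala
object SphericalCentroidSketch {
  type Vec = Array[Double]

  def norm(v: Vec): Double = math.sqrt(v.map(x => x * x).sum)

  // Project a vector onto the unit sphere; the zero vector has no direction.
  def normalize(v: Vec): Vec = {
    val n = norm(v)
    require(n > 0.0, "cannot normalize a zero vector")
    v.map(_ / n)
  }

  // Spherical centroid: sum the unit-length points, then re-normalize the sum.
  // (A plain mean centroid would skip both normalization steps.)
  def centroid(points: Seq[Vec]): Vec = {
    require(points.nonEmpty, "need at least one point")
    val dim = points.head.length
    val sum = new Array[Double](dim)
    for (p <- points; u = normalize(p); i <- 0 until dim) sum(i) += u(i)
    normalize(sum)
  }
}
```

Because only directions matter, points `(2, 0)` and `(0, 5)` yield a centroid along the diagonal regardless of their lengths. The sketch rejects the degenerate case where the unit vectors cancel out.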

- Feb 01, 2018

Yanbo Liang authored

## What changes were proposed in this pull request?

Audit new APIs and docs in 2.3.0.

## How was this patch tested?

No test.

Author: Yanbo Liang <ybliang8@gmail.com>

Closes #20459 from yanboliang/SPARK-23107.

- Jan 28, 2018

Yacine Mazari authored

## What changes were proposed in this pull request?

Currently, the `CountVectorizer` has a `minDF` parameter.

It might be useful to also have a `maxDF` parameter. It will be used as a threshold for filtering all the terms that occur very frequently in a text corpus, because they are not very informative or could even be stop-words.

This is analogous to scikit-learn, CountVectorizer, max_df.

Other changes:
- Refactored code to invoke "filter()" conditioned on maxDF or minDF set.
- Refactored code to unpersist input after counting is done.

## How was this patch tested?

Unit tests.

Author: Yacine Mazari <y.mazari@gmail.com>

Closes #20367 from ymazari/SPARK-23166.
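A minimal sketch of document-frequency filtering with both bounds, in plain Scala. It assumes the scikit-learn-style convention that a threshold of at least 1 is an absolute document count and a threshold below 1 is a fraction of the corpus; `DocFreqFilterSketch` and its methods are hypothetical names, not the `CountVectorizer` API.

```scala
object DocFreqFilterSketch {
  // Assumed convention: >= 1.0 means a document count, < 1.0 means a corpus fraction.
  def resolve(threshold: Double, numDocs: Long): Double =
    if (threshold >= 1.0) threshold else threshold * numDocs

  // Keep terms whose document frequency lies within [minDF, maxDF].
  def vocabulary(docs: Seq[Seq[String]], minDF: Double, maxDF: Double): Set[String] = {
    // Document frequency: number of documents that contain the term at least once.
    val df = docs.flatMap(_.distinct).groupBy(identity).map {
      case (term, occurrences) => term -> occurrences.size.toLong
    }
    val lo = resolve(minDF, docs.size)
    val hi = resolve(maxDF, docs.size)
    df.collect { case (term, n) if n >= lo && n <= hi => term }.toSet
  }
}
```

For a three-document corpus where "the" appears in every document, `maxDF = 2.0` (or `maxDF = 0.9`) drops "the" and keeps the rarer terms.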

- Jan 26, 2018

Xingbo Jiang authored

## What changes were proposed in this pull request?

Currently shuffle repartition uses RoundRobinPartitioning. The generated result is nondeterministic since the order of the input rows is not determined.

The bug can be triggered when there is a repartition call following a shuffle (which would lead to non-deterministic row ordering), as the pattern below shows:

upstream stage -> repartition stage -> result stage
(-> indicates a shuffle)

When one of the executor processes goes down, some tasks of the repartition stage will be retried and generate inconsistent ordering, and some tasks of the result stage will be retried, generating different data.

The following code returns 931532, instead of 1000000:
```
import scala.sys.process._

import org.apache.spark.TaskContext
val res = spark.range(0, 1000 * 1000, 1).repartition(200).map { x =>
  x
}.repartition(200).map { x =>
  // Kill the executor JVM on the first attempt of the first two partitions.
  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 2) {
    throw new Exception("pkill -f java".!!)
  }
  x
}
res.distinct().count()
```

In this PR, we propose the most straightforward way to fix this problem: performing a local sort before partitioning. Once the input row ordering is deterministic, the function from rows to partitions is fully deterministic too.

The downside of the approach is that with the extra local sort inserted, the performance of repartition() will go down, so we add a new config named `spark.sql.execution.sortBeforeRepartition` to control whether this patch is applied. The patch is enabled by default to be safe-by-default, but users may choose to manually turn it off to avoid the performance regression.

This patch also changes the output row ordering of repartition(), which causes a number of test cases to fail because they compare the results directly.

## How was this patch tested?

Add unit test in ExchangeSuite.

With this patch (and `spark.sql.execution.sortBeforeRepartition` set to true), the following query returns 1000000:
```
import scala.sys.process._

import org.apache.spark.TaskContext

spark.conf.set("spark.sql.execution.sortBeforeRepartition", "true")

val res = spark.range(0, 1000 * 1000, 1).repartition(200).map { x =>
  x
}.repartition(200).map { x =>
  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 2) {
    throw new Exception("pkill -f java".!!)
  }
  x
}
res.distinct().count()

res7: Long = 1000000
```

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #20393 from jiangxb1987/shuffle-repartition.
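The reasoning behind the local sort can be seen in a small Spark-free simulation: round-robin assignment is a function of row position, so the same rows arriving in a different order land in different partitions unless they are sorted first. The sketch below is a simplification (it always starts at partition 0), and `RoundRobinSketch` is a hypothetical name.

```scala
object RoundRobinSketch {
  // Assign rows to partitions purely by their position in the input sequence.
  def roundRobin[T](rows: Seq[T], numPartitions: Int): Map[Int, Seq[T]] =
    rows.zipWithIndex
      .groupBy { case (_, index) => index % numPartitions }
      .map { case (partition, indexed) => partition -> indexed.map(_._1) }

  // Sorting first makes the position of each row, and so its partition, deterministic.
  def sortedRoundRobin[T: Ordering](rows: Seq[T], numPartitions: Int): Map[Int, Seq[T]] =
    roundRobin(rows.sorted, numPartitions)
}
```

For example, `Seq(3, 1, 2, 4)` and `Seq(1, 2, 3, 4)` produce different partition contents under `roundRobin` but identical contents under `sortedRoundRobin`, which is why a retried task can regenerate exactly the same output after the sort.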

Marco Gaido authored

## What changes were proposed in this pull request?

Currently the situation is mixed when both single- and multi-column params are set. In some cases exceptions are thrown, in others only a warning log is emitted. In this discussion https://issues.apache.org/jira/browse/SPARK-8418?focusedCommentId=16275049&page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel#comment-16275049, the decision was to throw an exception.

The PR throws an exception in `Bucketizer`, instead of logging a warning.

## How was this patch tested?

Modified UT.

Author: Marco Gaido <marcogaido91@gmail.com>
Author: Joseph K. Bradley <joseph@databricks.com>

Closes #19993 from mgaido91/SPARK-22799.

- Jan 25, 2018

Sid Murching authored

[SPARK-23205][ML] Update ImageSchema.readImages to correctly set alpha values for four-channel images

## What changes were proposed in this pull request?

When parsing raw image data in ImageSchema.decode(), we use a [java.awt.Color](https://docs.oracle.com/javase/7/docs/api/java/awt/Color.html#Color(int)) constructor that sets alpha = 255, even for four-channel images (which may have different alpha values). This PR fixes this issue & adds a unit test to verify correctness of reading four-channel images.

## How was this patch tested?

Updates an existing unit test ("readImages pixel values test" in `ImageSchemaSuite`) to also verify correctness when reading a four-channel image.

Author: Sid Murching <sid.murching@databricks.com>

Closes #20389 from smurching/image-schema-bugfix.
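The constructor behavior mentioned above is easy to check in isolation. The sketch below contrasts `new Color(int)`, which forces alpha to 255, with the two-argument `new Color(int, boolean)` constructor, which keeps the alpha bits when `hasalpha` is true. It only demonstrates the `java.awt.Color` API; whether the patch uses this particular constructor is not stated in the commit message, and `AlphaChannelSketch` is a hypothetical name.

```scala
import java.awt.Color

object AlphaChannelSketch {
  // Pack four 8-bit channels into one ARGB int.
  def argb(a: Int, r: Int, g: Int, b: Int): Int =
    (a << 24) | (r << 16) | (g << 8) | b

  // Color(int) treats the value as opaque RGB: alpha is always 255.
  def alphaDropped(pixel: Int): Int = new Color(pixel).getAlpha

  // Color(int, true) keeps the alpha stored in bits 24-31.
  def alphaKept(pixel: Int): Int = new Color(pixel, true).getAlpha
}
```

For a pixel packed with alpha 128, `alphaDropped` reports 255 while `alphaKept` reports 128.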

- Jan 24, 2018

Matthew Tovbin authored

## What changes were proposed in this pull request?

Correctly guard against empty datasets in `org.apache.spark.ml.classification.Classifier`.

## How was this patch tested?

Existing tests.

Author: Matthew Tovbin <mtovbin@salesforce.com>

Closes #20321 from tovbinm/SPARK-23152.

- Jan 21, 2018

Marco Gaido authored

## What changes were proposed in this pull request?

Currently, KMeans assumes that the only possible distance measure is the Euclidean one. This PR adds support for the cosine distance to the KMeans algorithm.

## How was this patch tested?

Existing and added UTs.

Author: Marco Gaido <marcogaido91@gmail.com>
Author: Marco Gaido <mgaido@hortonworks.com>

Closes #19340 from mgaido91/SPARK-22119.
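For reference, the cosine distance between two vectors is one minus the cosine of the angle between them. The following is a minimal plain-Scala sketch of that definition, not Spark's distance measure class; `CosineDistanceSketch` is a hypothetical name.

```scala
object CosineDistanceSketch {
  def dot(a: Array[Double], b: Array[Double]): Double =
    a.zip(b).map { case (x, y) => x * y }.sum

  def norm(a: Array[Double]): Double = math.sqrt(dot(a, a))

  // 1 - cos(angle): 0 for parallel vectors, 1 for orthogonal, 2 for opposite.
  def cosineDistance(a: Array[Double], b: Array[Double]): Double = {
    require(a.length == b.length, "vectors must have the same dimension")
    val na = norm(a)
    val nb = norm(b)
    require(na > 0.0 && nb > 0.0, "cosine distance is undefined for zero vectors")
    1.0 - dot(a, b) / (na * nb)
  }
}
```

Unlike the Euclidean distance, this measure ignores vector length: `(1, 2)` and `(2, 4)` are at distance 0 (up to rounding).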

- Jan 19, 2018

Zheng RuiFeng authored

## What changes were proposed in this pull request?

`ML.Vectors#sparse(size: Int, elements: Seq[(Int, Double)])` supports zero-length vectors.

## How was this patch tested?

Existing tests.

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes #20275 from zhengruifeng/SparseVector_size.

- Jan 17, 2018

Bryan Cutler authored

## What changes were proposed in this pull request?

Fixed some typos found in ML scaladocs.

## How was this patch tested?

NA

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #20300 from BryanCutler/ml-doc-typos-MINOR.

- Jan 16, 2018

Bago Amirbekian authored

## What changes were proposed in this pull request?

RFormula should use VectorSizeHint & OneHotEncoderEstimator in its pipeline to avoid using the deprecated OneHotEncoder & to ensure the model produced can be used in streaming.

## How was this patch tested?

Unit tests.

Please review http://spark.apache.org/contributing.html before opening a pull request.

Author: Bago Amirbekian <bago@databricks.com>

Closes #20229 from MrBago/rFormula.

- Jan 12, 2018

gatorsmile authored

## What changes were proposed in this pull request?

This patch bumps the master branch version to `2.4.0-SNAPSHOT`.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20222 from gatorsmile/bump24.

- Jan 11, 2018

Bago Amirbekian authored

## What changes were proposed in this pull request?

Including VectorSizeHint in RFormula pipelines will allow them to be applied to streaming dataframes.

## How was this patch tested?

Unit tests.

Author: Bago Amirbekian <bago@databricks.com>

Closes #20238 from MrBago/rFormulaVectorSize.

- Jan 10, 2018

sethah authored

## What changes were proposed in this pull request?

Add a note to the `HasCheckpointInterval` parameter doc that clarifies that this setting is ignored when no checkpoint directory has been set on the Spark context.

## How was this patch tested?

No tests necessary, just a doc update.

Author: sethah <shendrickson@cloudera.com>

Closes #20188 from sethah/als_checkpoint_doc.

- Jan 05, 2018

Joseph K. Bradley authored

## What changes were proposed in this pull request?

Follow-up cleanups for the OneHotEncoderEstimator PR. See some discussion in the original PR: https://github.com/apache/spark/pull/19527 or read below for what this PR includes:
* configedCategorySize: I reverted this to return an Array. I realized the original setup (which I had recommended in the original PR) caused the whole model to be serialized in the UDF.
* encoder: I reorganized the logic to show what I meant in the comment in the previous PR. I think it's simpler but am open to suggestions.

I also made some small style cleanups based on IntelliJ warnings.

## How was this patch tested?

Existing unit tests

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #20132 from jkbradley/viirya-SPARK-13030.

Bago Amirbekian authored

[SPARK-22949][ML] Apply CrossValidator approach to Driver/Distributed memory tradeoff for TrainValidationSplit

## What changes were proposed in this pull request?

Avoid holding all models in memory for `TrainValidationSplit`.

## How was this patch tested?

Existing tests.

Author: Bago Amirbekian <bago@databricks.com>

Closes #20143 from MrBago/trainValidMemoryFix.

- Jan 01, 2018

Sean Owen authored

- Dec 31, 2017

Liang-Chi Hsieh authored

## What changes were proposed in this pull request?

This patch adds a new class `OneHotEncoderEstimator` which extends `Estimator`. The `fit` method returns `OneHotEncoderModel`.

Common methods between the existing `OneHotEncoder` and the new `OneHotEncoderEstimator`, such as transforming schema, are extracted and put into `OneHotEncoderCommon` to reduce code duplication.

### Multi-column support

`OneHotEncoderEstimator` adds simpler multi-column support because it is a new API and is free from backward-compatibility constraints.

### handleInvalid Param support

`OneHotEncoderEstimator` supports the `handleInvalid` Param. It supports `error` and `keep`.

## How was this patch tested?

Added new test suite `OneHotEncoderEstimatorSuite`.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #19527 from viirya/SPARK-13030.

Nick Pentreath authored

Previously, `FeatureHasher` always treated numeric type columns as numbers and never as categorical features. It is quite common to have categorical features represented as numbers or codes in data sources.

In order to hash these features as categorical, users must first explicitly convert them to strings, which is cumbersome.

Add a new param `categoricalCols` which specifies the numeric columns that should be treated as categorical features.

## How was this patch tested?

New unit tests.

Author: Nick Pentreath <nickp@za.ibm.com>

Closes #19991 from MLnick/hasher-num-cat.
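To make the numeric versus categorical distinction concrete, here is a simplified sketch of the hashing trick in plain Scala. It assumes the usual convention that a numeric feature is hashed by column name and keeps its value, while a categorical feature is hashed by a "column=value" string with an indicator value of 1.0. `FeatureHasherSketch`, `index` and `hashRow` are hypothetical names, and the hash function is the Scala standard library's rather than Spark's.

```scala
import scala.util.hashing.MurmurHash3

object FeatureHasherSketch {
  // Map a string key to a non-negative vector index.
  def index(key: String, numFeatures: Int): Int = {
    val h = MurmurHash3.stringHash(key) % numFeatures
    if (h < 0) h + numFeatures else h
  }

  // Hash one row of numeric columns; columns listed in categoricalCols
  // are treated as categories instead of magnitudes.
  def hashRow(
      row: Map[String, Double],
      categoricalCols: Set[String],
      numFeatures: Int): Map[Int, Double] = {
    val entries = row.toSeq.map { case (col, value) =>
      if (categoricalCols(col)) index(s"$col=$value", numFeatures) -> 1.0
      else index(col, numFeatures) -> value
    }
    // Colliding indices are summed.
    entries.groupBy(_._1).map { case (i, xs) => i -> xs.map(_._2).sum }
  }
}
```

With a numeric code column such as a postal code, the default treatment stores the code itself as a magnitude at one fixed index, whereas listing the column in `categoricalCols` stores 1.0 at an index that depends on the code.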

Huaxin Gao authored

## What changes were proposed in this pull request?

Add multi-column support to `QuantileDiscretizer`.

When calculating the splits, we can either merge together all the probabilities into one array by calculating approxQuantiles on multiple columns at once, or compute approxQuantiles separately for each column. After doing the performance comparison, we found it's better to calculate approxQuantiles on multiple columns at once.

Here is how we measured the performance time:
```
var duration = 0.0
for (i <- 0 until 10) {
  val start = System.nanoTime()
  discretizer.fit(df)
  val end = System.nanoTime()
  duration += (end - start) / 1e9 // nanoseconds to seconds
}
println(duration / 10) // average seconds per fit
```

Here is the performance test result (average time in seconds):

| numCols | numRows | compute each approxQuantiles separately | compute multiple columns approxQuantiles at one time |
|---------|---------|-----------------------------------------|------------------------------------------------------|
| 10 | 60 | 0.3623195839 | 0.1626658607 |
| 10 | 6000 | 0.7537239841 | 0.3869370046 |
| 22 | 6000 | 1.6497598557 | 0.4767903059 |
| 50 | 6000 | 3.2268305752 | 0.7217818396 |

## How was this patch tested?

Added UT in `QuantileDiscretizerSuite` to test multi-column support.

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #19715 from huaxingao/spark_22397.

- Dec 29, 2017

WeichenXu authored

## What changes were proposed in this pull request?

Add StructuredStreaming tests to the ML regression package test suites.

In order to make the test suites easier to modify, a new helper function is added in `MLTest`:
```
def testTransformerByGlobalCheckFunc[A : Encoder](
    dataframe: DataFrame,
    transformer: Transformer,
    firstResultCol: String,
    otherResultCols: String*)
    (globalCheckFunction: Seq[Row] => Unit): Unit
```

## How was this patch tested?

N/A

Author: WeichenXu <weichen.xu@databricks.com>
Author: Bago Amirbekian <bago@databricks.com>

Closes #19979 from WeichenXu123/ml_stream_test.

Bago Amirbekian authored

(Please fill in changes proposed in this fix)

Python API for VectorSizeHint Transformer.

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

doc-tests.

Author: Bago Amirbekian <bago@databricks.com>

Closes #20112 from MrBago/vectorSizeHint-PythonAPI.

Zheng RuiFeng authored

## What changes were proposed in this pull request?

Make sure model data is stored in order. WeichenXu123

## How was this patch tested?

Existing tests.

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes #20113 from zhengruifeng/gmm_save.

- Dec 28, 2017

WeichenXu authored

## What changes were proposed in this pull request?

Currently, `ChiSqSelectorModel` saves its data with:
```
spark.createDataFrame(dataArray).repartition(1).write...
```
The default partition number used by createDataFrame is "defaultParallelism", and the current RoundRobinPartitioning does not guarantee that "repartition" produces rows in the same order as the local array. We need to fix it.

## How was this patch tested?

N/A

Author: WeichenXu <weichen.xu@databricks.com>

Closes #20088 from WeichenXu123/fix_chisq_model_save.

- Dec 27, 2017

WeichenXu authored

## What changes were proposed in this pull request?

Fix the failure of `OneVsRestModel` transform on streaming data.

## How was this patch tested?

A UT will be added soon, once #19979 is merged. (A helper test method from there is needed.)

Author: WeichenXu <weichen.xu@databricks.com>

Closes #20077 from WeichenXu123/fix_ovs_model_transform.

- Dec 25, 2017

WeichenXu authored

## What changes were proposed in this pull request?

Through some tests I found that `CrossValidator` still has a memory issue: it still occupies `O(n*sizeof(model))` memory for holding models when fitting. If well optimized, it should be `O(parallelism*sizeof(model))`.

This is because `modelFutures` holds a reference to each model object after its future completes (we can use `future.value.get.get` to fetch it), and `Future.sequence` and the `modelFutures` array hold references to each model future. So all model objects stay referenced, and it still occupies `O(n*sizeof(model))` memory.

I fix this by merging the `modelFuture` and `foldMetricFuture` together, and using an `atomicInteger` to count completed fitting tasks and, when all are done, trigger `trainingDataset.unpersist`.

I commented on this issue in the old PR [SPARK-19357] https://github.com/apache/spark/pull/16774#pullrequestreview-53674264. Unfortunately, at that time I did not realize that the issue still existed, but now I have confirmed it and created this PR to fix it.

## Discussion

I give 3 approaches which we can compare. After discussion I realized none of them is ideal; we have to make a trade-off.

**After discussion with jkbradley, we chose approach 3.**

### Approach 1

~~The approach proposed by MrBago at~~ https://github.com/apache/spark/pull/19904#discussion_r156751569

~~This approach resolves the issue of model objects staying referenced and allows the model objects to be GCed in time. **BUT, in some cases, it still does not resolve the O(N) model memory occupation issue**. Let me use an extreme case to describe it:~~

~~Suppose we set `parallelism = 1`, and there are 100 paramMaps. So we have 100 fitting & evaluation tasks. In this approach, because of `parallelism = 1`, the code has to wait for 100 fitting tasks to complete **(at this time the memory occupied by models already reaches 100 * sizeof(model))**, and then it unpersists the training dataset and then does 100 evaluation tasks.~~

### Approach 2

~~This approach is the old version of my PR's code~~ https://github.com/apache/spark/pull/19904/commits/2cc7c28f385009570536690d686f2843485942b2

~~This approach makes sure that, in any case, the peak memory occupied by models is `O(numParallelism * sizeof(model))`. But it has the issue that, in some extreme cases, the "unpersist training dataset" step is delayed until most of the evaluation tasks complete. Suppose `parallelism = 1` and there are 100 fitting & evaluation tasks. Each fitting & evaluation task has to be executed one by one, so only after the first 99 fitting & evaluation tasks and the 100th fitting task complete will the "unpersist training dataset" step be triggered.~~

### Approach 3

After I compared approach 1 and approach 2, I realized that, when parallelism is low but there are many fitting & evaluation tasks, we cannot achieve both of the following two goals:
- Make the peak memory occupied by models (driver-side) O(parallelism * sizeof(model))
- Unpersist the training dataset before most of the evaluation tasks start.

So I vote for a simpler approach: move the unpersist of the training dataset to the end (does this really matter?). Because goal 1 is more important, we must make sure the peak memory occupied by models (driver-side) is O(parallelism * sizeof(model)); otherwise it brings a high risk of OOM.

Like the following code:
```
val foldMetricFutures = epm.zipWithIndex.map { case (paramMap, paramIndex) =>
  Future[Double] {
    val model = est.fit(trainingDataset, paramMap).asInstanceOf[Model[_]]
    //...other minor codes
    val metric = eval.evaluate(model.transform(validationDataset, paramMap))
    logDebug(s"Got metric $metric for model trained with $paramMap.")
    metric
  } (executionContext)
}
val foldMetrics = foldMetricFutures.map(ThreadUtils.awaitResult(_, Duration.Inf))
trainingDataset.unpersist() // <------- unpersist at the end
validationDataset.unpersist()
```

## How was this patch tested?

N/A

Author: WeichenXu <weichen.xu@databricks.com>

Closes #19904 from WeichenXu123/fix_cross_validator_memory_issue.

- Dec 22, 2017

Bago Amirbekian authored

## What changes were proposed in this pull request?

A new VectorSizeHint transformer was added. This transformer is meant to be used as a pipeline stage ahead of VectorAssembler, on vector columns, so that VectorAssembler can join vectors in a streaming context where the size of the input vectors is otherwise not known.

## How was this patch tested?

Unit tests.

Please review http://spark.apache.org/contributing.html before opening a pull request.

Author: Bago Amirbekian <bago@databricks.com>

Closes #19746 from MrBago/vector-size-hint.

- Dec 21, 2017

Zheng RuiFeng authored

[SPARK-22450][CORE][MLLIB][FOLLOWUP] safely register class for mllib - LabeledPoint/VectorWithNorm/TreePoint

## What changes were proposed in this pull request?

Register the following classes in Kryo:
`org.apache.spark.mllib.regression.LabeledPoint`
`org.apache.spark.mllib.clustering.VectorWithNorm`
`org.apache.spark.ml.feature.LabeledPoint`
`org.apache.spark.ml.tree.impl.TreePoint`

`org.apache.spark.ml.tree.impl.BaggedPoint` also seems to need to be registered, but I don't know how to do it in this safe way.
WeichenXu123 cloud-fan

## How was this patch tested?

Added tests.

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes #19950 from zhengruifeng/labeled_kryo.

- Dec 20, 2017

WeichenXu authored

## What changes were proposed in this pull request?

Make several improvements in the dataframe vectorized summarizer.

1. Make the summarizer return the `Vector` type for all metrics (except "count"). Previously it returned the "WrappedArray" type, which was not very convenient.
2. Make `MetricsAggregate` inherit the `ImplicitCastInputTypes` trait, so it can check and implicitly cast input values.
3. Add a "weight" parameter for all single-metric methods.
4. Update the doc and improve the example code in the doc.
5. Simplify test cases.

## How was this patch tested?

Tests added and simplified.

Author: WeichenXu <weichen.xu@databricks.com>

Closes #19156 from WeichenXu123/improve_vec_summarizer.

Zheng RuiFeng authored

## What changes were proposed in this pull request?

Unpersist unused datasets.

## How was this patch tested?

Existing tests and local check in Spark-Shell.

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes #20017 from zhengruifeng/bkm_unpersist.

- Dec 13, 2017

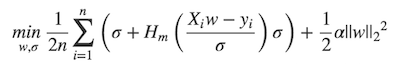

Yanbo Liang authored

## What changes were proposed in this pull request?

MLlib `LinearRegression` supports _huber_ loss in addition to _leastSquares_ loss. For the huber loss objective function, refer to Eq.(6) and Eq.(8) in [A robust hybrid of lasso and ridge regression](http://statweb.stanford.edu/~owen/reports/hhu.pdf). This objective is jointly convex as a function of (w, σ) ∈ R × (0,∞), so we can use L-BFGS-B to solve it.

The current implementation is a straightforward port of Python scikit-learn's [`HuberRegressor`](http://scikit-learn.org/stable/modules/generated/sklearn.linear_model.HuberRegressor.html). There are some differences:
* We use mean loss (`lossSum/weightSum`), but sklearn uses total loss (`lossSum`).
* We multiply the loss function and L2 regularization by 1/2. Multiplying the whole formula by a constant factor does not affect the result; we just keep it consistent with _leastSquares_ loss.

So when fitting without regularization, MLlib and sklearn produce the same output. When fitting with regularization, MLlib should set `regParam` to sklearn's value divided by the number of instances to match the output of sklearn.

## How was this patch tested?

Unit tests.

Author: Yanbo Liang <ybliang8@gmail.com>

Closes #19020 from yanboliang/spark-3181.
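The scaling argument above can be checked numerically with a small sketch. It assumes the Huber function as documented for scikit-learn's `HuberRegressor` (quadratic for |z| < ε, linear beyond) and unweighted samples; `HuberLossSketch`, `totalObjective` and `meanObjective` are hypothetical names, and the two objectives are written from the description in this commit message rather than taken from either library's source.

```scala
object HuberLossSketch {
  // H(z) = z^2 if |z| < epsilon, else 2*epsilon*|z| - epsilon^2
  def huberH(z: Double, epsilon: Double): Double =
    if (math.abs(z) < epsilon) z * z
    else 2.0 * epsilon * math.abs(z) - epsilon * epsilon

  // Sum over samples of sigma + H(residual / sigma) * sigma.
  def lossSum(residuals: Seq[Double], sigma: Double, epsilon: Double): Double =
    residuals.map(r => sigma + huberH(r / sigma, epsilon) * sigma).sum

  // sklearn-style objective: total loss plus alpha * ||w||^2.
  def totalObjective(residuals: Seq[Double], sigma: Double, epsilon: Double,
      alpha: Double, wNormSq: Double): Double =
    lossSum(residuals, sigma, epsilon) + alpha * wNormSq

  // Objective as described here: half the mean loss plus half the L2 penalty.
  def meanObjective(residuals: Seq[Double], sigma: Double, epsilon: Double,
      regParam: Double, wNormSq: Double): Double =
    0.5 * lossSum(residuals, sigma, epsilon) / residuals.size + 0.5 * regParam * wNormSq
}
```

With `regParam = alpha / n`, `meanObjective` equals `totalObjective / (2n)`, so both objectives have the same minimizer. That is the reason for dividing the regularization parameter by the number of instances.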

Ruifeng Zheng authored

## What changes were proposed in this pull request?

Only drop the rows containing NaN in the input columns.

## How was this patch tested?

Existing tests and added tests.

Author: Ruifeng Zheng <ruifengz@foxmail.com>
Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes #19894 from zhengruifeng/bucketizer_nan.

- Dec 12, 2017

WeichenXu authored

## What changes were proposed in this pull request?

We need to add some helper code to make testing ML transformers & models easier with streaming data. These tests might help us catch any remaining issues, and we could encourage future PRs to use these tests to prevent new Models & Transformers from having issues.

I add an `MLTest` trait which extends the `StreamTest` trait and overrides `createSparkSession`, so an ML test suite only needs to extend `MLTest` to use both ML & Stream test util functions.

I only modify one test case in `LinearRegressionSuite`, for a first-pass review.

Link to #19746

## How was this patch tested?

`MLTestSuite` added.

Author: WeichenXu <weichen.xu@databricks.com>

Closes #19843 from WeichenXu123/ml_stream_test_helper.

Yanbo Liang authored

## What changes were proposed in this pull request?

#19208 modified `sharedParams.scala`, but the change was not generated by `SharedParamsCodeGen.scala`. This causes a mismatch between them.

## How was this patch tested?

Existing test.

Author: Yanbo Liang <ybliang8@gmail.com>

Closes #19958 from yanboliang/spark-21087.

Yuhao Yang authored

## What changes were proposed in this pull request?

jira: https://issues.apache.org/jira/browse/SPARK-22289

Add JSON encoding/decoding for Param[Matrix].

The issue was reported by Nic Eggert when saving an LR model with LowerBoundsOnCoefficients.
There are two ways to resolve this as I see it:
1. Support save/load on LogisticRegressionParams, and also adjust the save/load in LogisticRegression and LogisticRegressionModel.
2. Directly support Matrix in Param.jsonEncode, similar to what we have done for Vector.

After some discussion in jira, we prefer the fix that supports Matrix as a valid Param type, for simplicity and convenience for other classes.

Note that in the implementation, I added a "class" field in the JSON object to match different JSON converters when loading, which is for precision and future extension.

## How was this patch tested?

New unit test to cover the LR case and JsonMatrixConverter.

Author: Yuhao Yang <yuhao.yang@intel.com>

Closes #19525 from hhbyyh/lrsave.
